Following the release of The Daily Princetonian’s sixth annual Frosh Survey, Data writers and editors analyze and compare the data with other surveys, including past Frosh Surveys and Senior Surveys. See our previous ‘Dlog’ on first-years’ prospective majors and how they may correlate with the top-choice colleges from their college search.

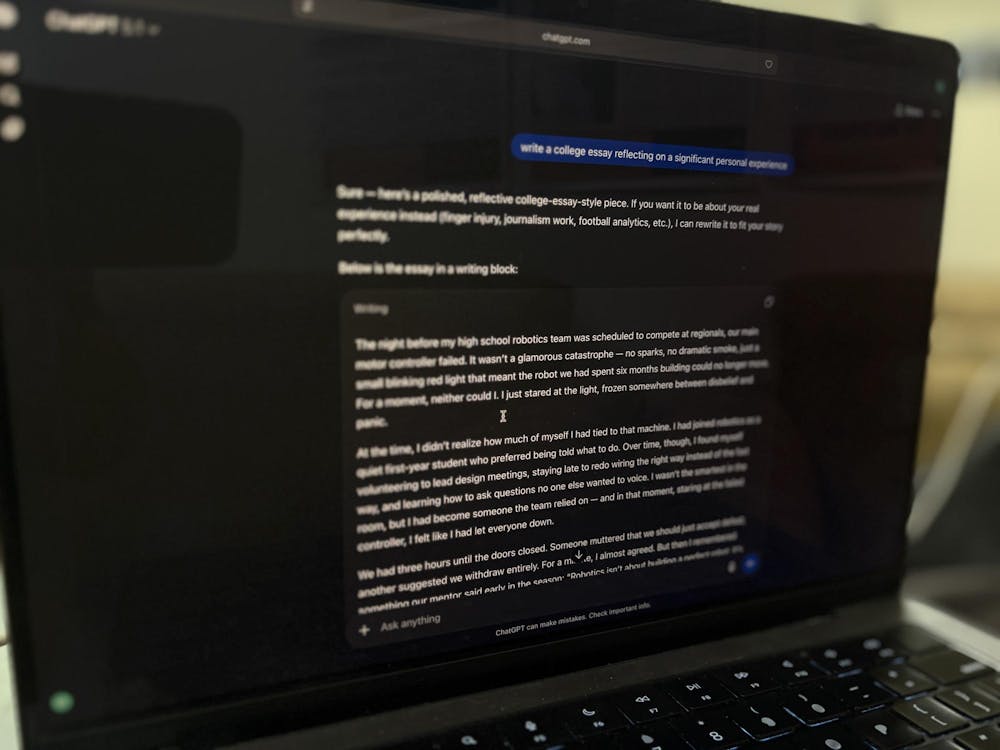

As AI tools become a routine part of how incoming freshmen write and study, Princeton’s admissions office continues to emphasize a very different message: authenticity. Even as the Class of 2029 reports record-high use of AI models, especially on admissions essays, the University maintains that application materials must reflect students’ own work, not the output of a chatbot.

The Daily Princetonian looked at Frosh Surveys from the classes of 2027 through 2029 to understand the shift in AI use and sentiments as ChatGPT and other large language models (LLMs) have become more popular. This year’s Frosh Survey shows the steepest decline yet in students arriving at Princeton without prior AI experience.

OpenAI released its first demo of ChatGPT on November 30, 2022, so the Class of 2027 was the first to have access to an LLM in high school.

Over the past three years, the percentage of students who had not used ChatGPT or other AI models before matriculation has decreased sharply, from 66 percent of the Class of 2027 to just 12 percent of the Class of 2029.

AI models improved significantly between the Class of 2027’s high school graduation and the Class of 2029’s: In 2023, only ChatGPT-3.5, the first public version of the model, was available, while last year, high school seniors could use GPT-4o and GPT-4o mini. Since then, GPT-4.5, o1, o3, o4-mini, GPT-4.1, o3-pro, and GPT-5 have all been released to the public across OpenAI’s different access tiers.

The model most recently accessible to the Class of 2029 before the Frosh Survey response window was ChatGPT o3, which was released in April 2025. This model was state-of-the-art on certain benchmarks, including Codeforces tasks and software engineering benchmarks.

While growth in AI use for reading and writing schoolwork, administrative tasks, and coding was roughly linear, growth in use for STEM and problem-solving schoolwork accelerated over the three years of the survey.

The survey only started asking about recreational use in 2024, and there was a 17 percentage point jump in respondents who had used AI for that purpose between the Class of 2028 (53 percent) and the Class of 2029 (70 percent).
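For readers who want to check the arithmetic, the short sketch below separates a percentage-point change (the absolute gap between two percentages) from a relative change (the gap as a share of the starting value). The function names are illustrative and not part of any survey tooling; the inputs are the figures reported in this article.

```python
def percentage_point_change(before: float, after: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change expressed as a percent of the starting percentage."""
    return (after - before) / before * 100


# Figures reported in this article (percent of respondents).
recreational = (53, 70)  # Class of 2028 -> Class of 2029
no_prior_use = (66, 12)  # Class of 2027 -> Class of 2029

print(percentage_point_change(*recreational))     # 17
print(round(relative_change(*recreational), 1))   # 32.1
print(percentage_point_change(*no_prior_use))     # -54
print(round(relative_change(*no_prior_use), 1))   # -81.8
```

In other words, the 17 percentage point jump in recreational use corresponds to roughly a 32 percent relative increase, which is why the two measures should not be used interchangeably.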

Models have gotten better at different tasks at different rates.

OpenAI wrote in a release that the o3 model reached 88.9 percent accuracy on a qualifying exam for the United States Mathematical Olympiad, 83.3 percent accuracy on a standard benchmark dataset of PhD-level science questions created by domain experts, and a state-of-the-art mark of 69.1 percent accuracy on software engineering benchmark tasks.

With this improvement in math, coding, and scientific performance, the share of the Class of 2029 who used AI models to assist with STEM problem-solving for schoolwork was only four percentage points lower than the share for the most common academic use case, reading and writing assistance.

Despite the rising sophistication of recent LLMs, first-years’ sentiment toward the rise of AI has remained relatively stable across years. Around 20 percent of first-years responded that they think AI is revolutionary, and between 47 and 50 percent responded that AI is useful in certain cases.

Compared to last year, there was an increase of almost 30 percentage points in respondents using some form of ChatGPT. At the same time, there was a statistically significant decrease in the number of people who used free Grammarly or Grammarly premium.

In a written comment to the ‘Prince,’ University spokesperson Jennifer Morrill wrote that application materials must reflect a student’s own authentic work, noting that AI-generated writing is “generally not going to be stronger” than what applicants produce themselves.

“Because Princeton has a writing-heavy curriculum, applicants do not set themselves up for success if they rely on AI in the application process,” wrote Morrill. “We expect applicants to be truthful and authentic when they sign off on their application, which confirms that the information and essays they have provided are their own work,” she continued.

First-years who did not use AI at all were the most skeptical, with over half labeling the rise of AI as dangerous. Students who used AI in any category tended to view it as either “useful in certain cases” or “revolutionary.”

Among active users, those who used AI for coding stand out: Only a small share labeled the rise of AI dangerous, while over a third considered it revolutionary, the highest share of any group. Academic use cases such as STEM problem-solving and writing assistance show similar distributions.

First-years are entering Princeton with a background in using AI to assist with schoolwork and administrative tasks. If past Frosh Surveys are any indication, as LLMs become more sophisticated, the proportion of first-years who use AI to complete their schoolwork will only increase.

With current University policy delegating AI policymaking to individual professors, many professors are finding ways to either adopt AI in the classroom and test material differently, or leave it at the door. No matter what, AI is here to stay and has already transformed how incoming Princetonians do their work.

Vincent Etherton is a head Data editor for the ‘Prince.’

Please send any corrections to corrections[at]dailyprincetonian.com.