Five Princetonians are leading the charge into the field of artificial intelligence (AI), according to TIME Magazine’s 2023 TIME100 Artificial Intelligence list. Princeton’s contingent includes prominent critics of the potential biases of AI, AI pioneers who have also warned of potential risks, and the CEO of an AI company focused on safety and alignment with human interests.

TIME named University computer science professor Arvind Narayanan and Sayash Kapoor, a graduate student at the Center for Information Technology Policy (CITP), among the 100 most influential individuals shaping the development of and dialogue around AI, along with three University alumni: Anthropic CEO Dario Amodei GS ’11, Stanford computer science professor Dr. Fei-Fei Li ’99, and former Google CEO Eric Schmidt ’76.

Narayanan and Kapoor both work at CITP, which Narayanan directs. In 2019, Narayanan delivered a viral lecture titled “How to recognize AI snake oil” that showcased the flaws inherent in many predictive AI technologies, the outputs of which he argues are often shaped by human biases. Narayanan points to AI that companies use to evaluate job candidates as one application of machine learning that, rather than living up to ideals of impartiality, carries traces of human prejudice.

Kapoor, a Ph.D. student working under Narayanan, is co-writing a book with the professor about what they call “AI snake oil,” slated to be published in 2024. The duo also maintains a popular Substack page of the same name.

Narayanan also leads the Princeton Web Transparency and Accountability Project, which aims to understand the user data that companies collect and what they do with it. He has also spoken with the working group on generative AI organized by the office of Dean of the College Jill Dolan. The group’s report is being reviewed by the Provost.

“My view is that predictive AI is fundamentally dubious. The reasons why the future is hard to predict are intrinsic and are unlikely to be rectified. Not all predictive AI is snake oil, but when it is marketed in a way that conceals its limitations, it’s a problem,” Narayanan wrote in an email to The Daily Princetonian.

Narayanan also elaborated on some of the ways in which University scholars are making strides in mitigating the harms associated with AI, including the likelihood of biases and the prospect of existential risks.

“Professor Aleksandra Korolova’s team has analyzed how ad targeting algorithms can be discriminatory and how they can be rectified. A team led by postdoctoral fellow Shazeda Ahmed has taken an ethnographic look at the insular AI safety community that has generated influential arguments and policy prescriptions around AI and existential risk,” Narayanan wrote.

“Professor Olga Russakovsky’s team builds advanced computer vision algorithms while also mitigating biases. Sayash and I have analyzed the impact of generative AI on social media. That’s just a small smattering of the ongoing projects,” he added.

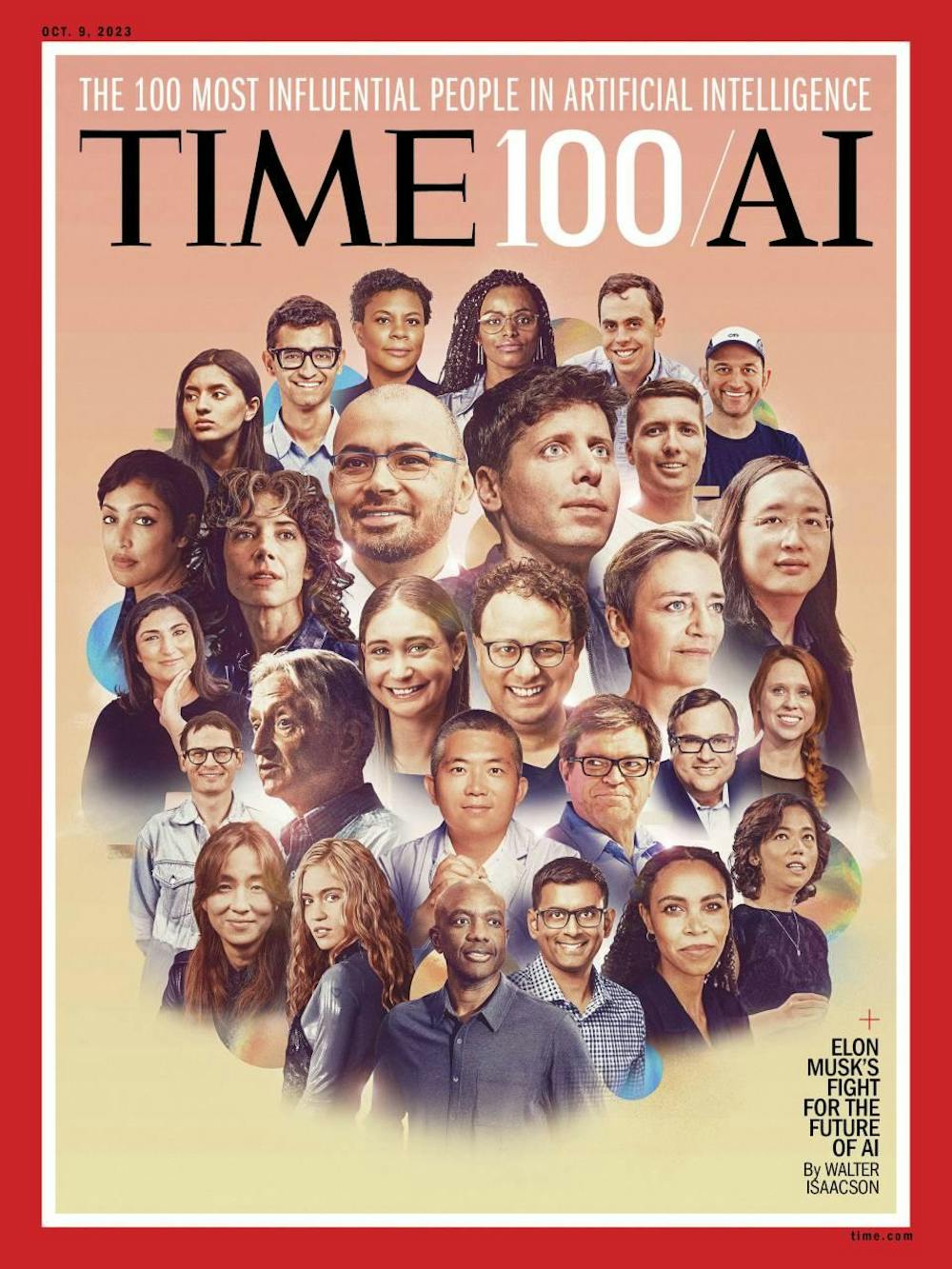

Canadian singer and songwriter Grimes and OpenAI CEO Sam Altman are among the other 95 prominent figures regarded by TIME as being at the forefront of contemporary AI developments.

“We wanted to highlight the industry leaders at the forefront of the AI boom, individuals outside these companies who are grappling with profound ethical questions around the uses of AI, and the innovators around the world who are trying to use AI to address social challenges,” wrote Naina Bajekal, the executive editor at TIME who helped to compile the inaugural TIME100 AI list.

Princeton alumni are also at the forefront of innovation in AI.

Li attended the University as an undergraduate, concentrating in physics and computer science before pursuing graduate studies in computer science at Caltech.

Li has played a pivotal role in expanding the diversity of individuals working within the fields of AI and computer science. Alongside University associate computer science professor Olga Russakovsky, Li co-founded AI4ALL, a nonprofit organization that aims to promote diversity and inclusion in this line of work, including through camps for high schoolers.

According to Li’s TIME100 profile, the computer scientist was also instrumental in pioneering highly accurate AI image-recognition systems. Recently, Li has advocated for considerable government funding to spearhead the development of AI technologies in a safe and regulated manner.

After watching the 2023 film “Oppenheimer” with her children, Li remarked in her TIME100 interview that the safety concerns that plagued the discovery of atomic fission mirror those surrounding today’s highly advanced AI technologies.

“I do resonate really, really deeply with the sense of responsibility that scientists have. We’re all citizens of the world,” Li said in the interview.

Schmidt is perhaps best known for his stint as CEO of Google from 2001 to 2011. While an undergraduate at the University, Schmidt initially studied architecture before pivoting to electrical engineering.

Much like Li, Schmidt, now the co-founder of Schmidt Futures, fears that AI is transforming society at full force without the regulatory guardrails needed to prevent the dissemination of falsehoods on a vast scale. Specifically, Schmidt worries that the AI underlying many social media platforms could undermine the integrity of political elections through the widespread publication of misinformation.

“You have this very large election in India, you have elections in many other democracies, and I don’t think that social media companies are ready for it. The deluge of fake videos, fake pictures, the ability to voicecast — I just don’t think we’re ready,” Schmidt said in his TIME100 interview.

Amodei was named an influential figure in AI by TIME100 alongside his sister, Daniela Amodei. The siblings are among the co-founders of Anthropic, a major global AI lab that aims to align AI technologies with human values.

Anthropic is known for developing “Constitutional AI,” which the TIME100 profile of the Amodei siblings describes as “a radical new method for aligning AI systems” through the explicit stipulation of principles that AI should adhere to.

At the University, Dario Amodei worked under neuroscience professor Michael Berry and physics professor William Bialek to complete his Ph.D. studies in physics. He later led the GPT-2 and GPT-3 teams at OpenAI before co-founding Anthropic.

During Amodei’s time at OpenAI, the organization received criticism for allowing pressure for funding to affect its founding ideal of transparency.

Amy Ciceu is a senior News writer for the ‘Prince.’
